NEW: challenge for WAT2021 is open!

Task Description

The goal of the task is to improve translate performance with the help of another modality (images) associated to input sentences.

- Task 1-(a): Multimodal English to Japanese translation (constrained)

- Task 1-(b): Multimodal English to Japanese translation (non-constrained)

- Task 2-(a): Multimodal Japanese to English translation (constrained)

- Task 2-(b): Multimodal Japanese to English translation (non-constrained)

In the constrained setting (a), external resources such as additional data and pre-trained models/embeddings (with external data), are not allowed to use except for the following.

- Pre-trained convolutional neural network (CNN) models (for visual analysis).

- Basic linguistic tools such as taggers, parsers, and morphological analyzers.

There is no limitation for the unconstrained setting (b). In both settings (a) and (b), employed resources should be clearly described in the system description paper.

Data

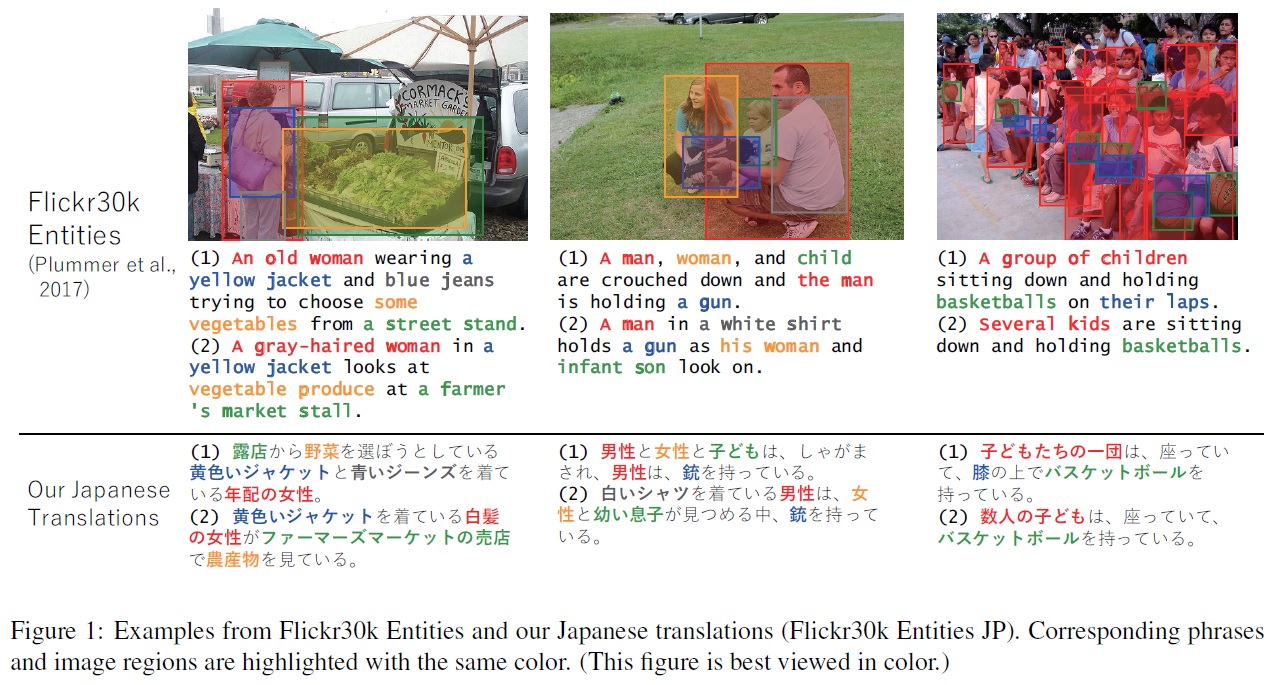

We use the Flickr30kEntities Japanese (F30kEnt-Jp) dataset for this task. This is an extended dataset of the Flickr30k and Flickr30k Entities image caption datasets where manual Japanese translations are newly added. Notably, it has the annotations of many-to-many phrase-to-region correspondences in both English and Japanese captions, which are expected to strongly supervise multimodal grounding and provide new research directions. For details, please visit our project page.

Training and Validation Data

Japanese sentences can be obtained at the above project page of F30kEnt-JP. Note that you also have to visit the authors’ pages of Flickr30k and Flickr30k Entities to download the corresponding images, English sentences and annotations. We use the same splits of training and validation data designated in Flickr30k Entities.

- Japanese Sentences (with phrase-region annotations): Download

- English Sentences (with phrase-region annotations): Visit the page of Flickr30k Entities

- Images: Visit the page of Flickr30k. Images and raw English captions are also available at Kaggle.

- Image IDs for training: train.txt

- Image IDs for validation: val.txt

Please don’t use the samples not included in the training nor in the validation set. They are overlapped with the final testing set.

For each image, you can find the corresponding text files named (Image_ID).txt in Japanese and English sets respectively. While the original Flickr30k has five English sentences for each image, our Japanese set has the translations of the first two sentences of each. So, we are going to use two parallel sentences for each image.

In summary, we have 29,783 images (59,566 sentences) for training and 1,000 images (2,000 sentences) for validation, respectively.

Testing Data

- English to Japanese: en2jp_test.txt

- Japanese to English: jp2en_test.txt

- Image IDs for test: test.txt

Each test file contains 1,000 lines of input sentences corresponding to the images in the same order as test.txt. Note that phrase-to-region annotation is not available in the test data (i.e., only raw texts and images are available to use).

Schedule, Submission, and Evaluation

Please follow the instruction at WAT2020 webpage.

Contact

wat20-mmtjp(at)nlab.ci.i.u-tokyo.ac.jp